Overview

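Python environment setup -> fetch the current day's exchange rates -> fetch the past year's exchange rates -> data cleaning -> forecasting -> integrating everything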

Environment setup

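In PyCharm, open the Interpreter settings, choose to create a new interpreter, and then install the modules you need. This article uses the following libraries: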

(.venv) PS G:\nzd> pip install BeautifulSoup4

(.venv) PS G:\nzd> pip install pandas

(.venv) PS G:\nzd> pip install lxml

(.venv) PS G:\nzd> pip install DrissionPage

(.venv) PS G:\nzd> pip install numpy

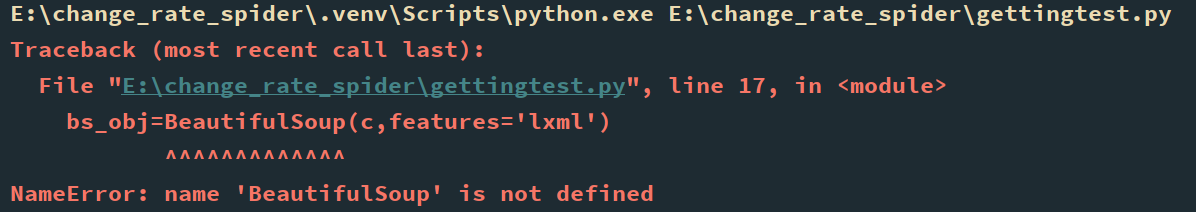
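Before running the scripts, it can help to confirm that the interpreter selected in PyCharm actually sees these packages. The following is a minimal sketch using only the standard library; the helper name `missing_modules` is made up for illustration. Note that the BeautifulSoup4 package is imported under the name `bs4`.

```python
from importlib.util import find_spec

# Import names for the packages installed above.
# BeautifulSoup4 is imported as "bs4"; the others use their package names.
REQUIRED_MODULES = ["bs4", "pandas", "lxml", "DrissionPage", "numpy"]


def missing_modules(names):
    """Return the module names that the current interpreter cannot find."""
    return [name for name in names if find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are available.")
```

If anything is reported as missing, the usual cause is that `pip install` ran in a different environment from the one the project interpreter points to.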

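Writing a script to fetch the current day's exchange rates (a first exploration; this code is not used in the later steps)

The code is as follows: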


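    import pandas as pd
    from bs4 import BeautifulSoup
    from urllib.request import urlopen

    # Currencies to keep: New Zealand dollar, US dollar, Australian dollar.
    # The names must match the text on the page, so they stay in Chinese.
    TARGET_CURRENCIES = {'新西兰元', '美元', '澳大利亚元'}

    name = []
    xianchao_buy_price = []   # cash buying rate
    xianchao_sell_price = []  # cash selling rate
    xianhui_buy_price = []    # spot exchange buying rate
    xianhui_sell_price = []   # spot exchange selling rate
    date_waihui = []          # publish date
    time_waihui = []          # publish time

    # The first page has no index suffix; later pages are index_1.html, index_2.html, ...
    for i in range(0, 10):
        if i == 0:
            url = 'http://www.boc.cn/sourcedb/whpj/'
        else:
            url = 'http://www.boc.cn/sourcedb/whpj/index_{}.html'.format(i)
        with urlopen(url) as response:
            web = response.read()

        bs_obj = BeautifulSoup(web, features='lxml')
        t = bs_obj.find_all('table')[1]  # the script reads the second <table> on the page
        all_tr = t.find_all('tr')
        all_tr.pop(0)  # drop the header row

        for r in all_tr:
            all_td = r.find_all('td')
            if not all_td:
                continue  # skip rows without data cells
            if all_td[0].text in TARGET_CURRENCIES:
                name.append(all_td[0].text)
                xianhui_buy_price.append(all_td[1].text)
                xianchao_buy_price.append(all_td[2].text)
                xianhui_sell_price.append(all_td[3].text)
                xianchao_sell_price.append(all_td[4].text)
                date_waihui.append(all_td[6].text)
                time_waihui.append(all_td[7].text)

    # Column headers are kept in Chinese: currency name, publish date, publish time,
    # cash buying rate, cash selling rate, spot buying rate, spot selling rate.
    test = pd.DataFrame({'货币名称': name,
                         '发布日期': date_waihui,
                         '发布时间': time_waihui,
                         '现钞买入价': xianchao_buy_price,
                         '现钞卖出价': xianchao_sell_price,
                         '现汇买入价': xianhui_buy_price,
                         '现汇卖出价': xianhui_sell_price})

    # Writing .xlsx needs an Excel engine such as openpyxl installed alongside pandas.
    test.to_excel(r'E:\change_rate_spider\waihui.xlsx')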
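The paging pattern and the currency filter used by this script can be separated from the network code, which makes them easy to check on their own. The sketch below is illustrative only: `page_url` and `keep_row` are hypothetical helper names, the sample rows are made-up values, and stripping whitespace before comparing is an added tolerance that the original script does not have.

```python
BASE_URL = 'http://www.boc.cn/sourcedb/whpj/'

# Currency names as they appear on the page (NZD, USD, AUD).
TARGET_CURRENCIES = {'新西兰元', '美元', '澳大利亚元'}


def page_url(index):
    """Return the URL of listing page `index`; page 0 is the landing page."""
    if index == 0:
        return BASE_URL
    return '{}index_{}.html'.format(BASE_URL, index)


def keep_row(cells):
    """Return True if the row (a list of cell strings) is for a target currency."""
    # Assumption: surrounding whitespace is ignored when matching the name.
    return bool(cells) and cells[0].strip() in TARGET_CURRENCIES


# Made-up sample rows, only to show the filter's behaviour.
print([page_url(i) for i in range(3)])
print(keep_row(['美元', '715.5']), keep_row(['欧元', '780.1']), keep_row([]))
# True False False
```

Keeping these two decisions in small functions means the scraping loop only has to fetch a page, split rows into cells, and append the rows that `keep_row` accepts.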

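Fetching the past year's exchange rates (each day has many quotes. The script currently stalls at the Bank of China CAPTCHA, which I type in by hand every time. It is clumsy, but I have not looked into how to automate the input.)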


    import time

    from bs4 import BeautifulSoup
    from DrissionPage import ChromiumPage
    import pandas as pd


    def parse_table(page):
        """Parse the rate table on the current page into a list of rows."""
        soup = BeautifulSoup(page.html, 'html.parser')
        target_div = soup.find('div', class_='BOC_main publish')
        if not target_div:
            raise ValueError("Table container not found")
        tbody_tag = target_div.find('tbody')
        if not tbody_tag:
            raise ValueError("Table data not found")

        tr_tags = tbody_tag.find_all('tr')
        data_list = []
        for tr in tr_tags:
            td_tags = tr.find_all('td')
            # Only rows with exactly 7 cells are treated as data rows.
            if len(td_tags) == 7:
                # Keep cells 6, 1, 3 and 5, in that order.
                row_data = [td.text.strip() for td in [td_tags[6], td_tags[1], td_tags[3], td_tags[5]]]
                data_list.append(row_data)
        return data_list


    def get_total_pages(page):
        """Read the total page count from the pagination bar."""
        soup = BeautifulSoup(page.html, 'html.parser')
        paginator = soup.select_one('#list_navigator ol')
        if paginator:
            pages = paginator.find_all('li')
            for li in pages:
                # The page-count item contains both '共' and '页'.
                if '共' in li.text and '页' in li.text:
                    # Join every digit in the item text into one number.
                    total_pages = int(''.join(filter(str.isdigit, li.text)))
                    return total_pages
            raise ValueError("Total page count not found")
        raise ValueError("Pagination bar not found")
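`get_total_pages` builds the page count by joining every digit found in the matching list item. That works when the item holds a single number, but it would merge digits if the text ever contained more than one. The sketch below compares that approach with a stricter regular expression; this is a swapped-in alternative, not what the script above does, and the helper names and sample strings are made up for illustration.

```python
import re


def digits_only(text):
    """The approach used in get_total_pages: join every digit in the text."""
    return int(''.join(filter(str.isdigit, text)))


def total_pages_from_text(text):
    """Return N from text shaped like '共N页', or None if no such pattern exists."""
    match = re.search(r'共\s*(\d+)\s*页', text)
    return int(match.group(1)) if match else None


# Made-up sample strings, not copied from the real page.
single = '共12页'
mixed = '第1页 共12页'

print(digits_only(single), total_pages_from_text(single))  # 12 12
print(digits_only(mixed), total_pages_from_text(mixed))    # 112 12
```

Whether the stricter pattern is needed depends on the actual markup of the pagination bar; if each `li` carries only one number, both functions return the same result.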